Studying Time: 10 minutes

Why Is Actual-time Information Ingestion Vital for B2C Manufacturers?

MoEngage is an enterprise SaaS enterprise that helps B2C manufacturers collect helpful insights from their buyer’s conduct and use these insights to arrange environment friendly engagement and retention campaigns.

As these campaigns and insights are primarily based on the top customers’ interactions (shoppers), essentially the most essential requirement is to have the ability to ingest all of this demographic and interplay information and make it obtainable to numerous inside providers in a well timed vogue.

Because the enterprise has grown during the last years, we’ve been lucky to have the ability to assist prospects internationally to attain their advertising and marketing targets. With companies turning into more and more digitized, our prospects themselves have seen progress of their buyer base at an exponential price. They’ve now come to count on extremely responsive purposes, which might solely be doable if their information could be ingested in real-time.

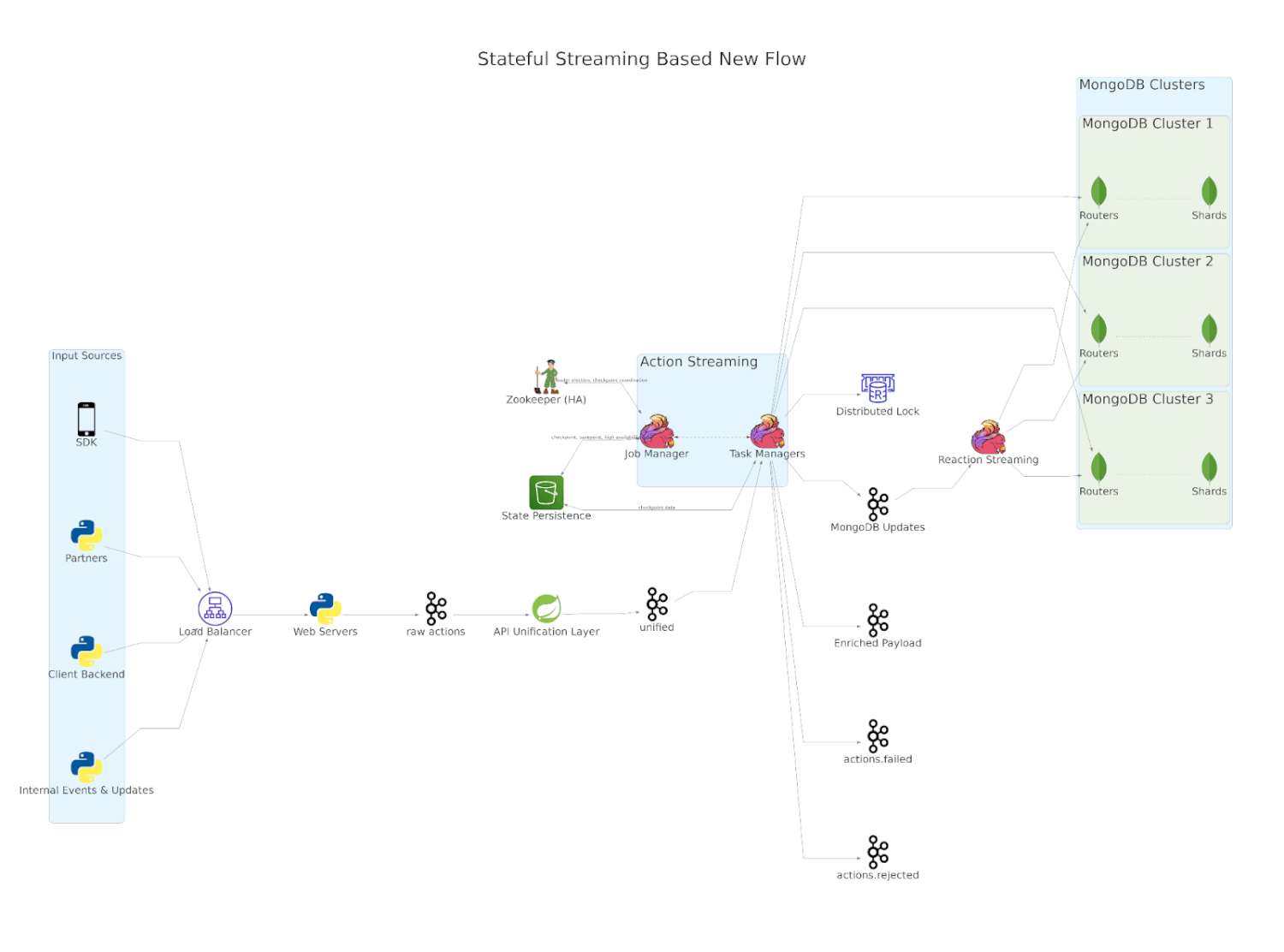

Overview of MoEngage’s Information Ingestion Structure

When ingesting information, the database turns into the most important bottleneck. MoEngage makes use of MongoDB for many of its database use circumstances. Whereas some databases can help increased write throughput, they’re unable to help querying utilizing numerous filters as they’re primarily key-value shops. We’ve spent appreciable time fine-tuning our clusters. Indexing, sharding, and occasion right-sizing are among the many many optimizations we’ve in place. Nevertheless, these aren’t sufficient to make sure real-time ingestion. Thus, our purposes have to be designed as a way to obtain a bigger scale of reads and writes.

What high-level method did we take to resolve this?

One of many strategies that may dramatically enhance the size of writes is using bulk writes. Nevertheless, utilizing it’s difficult as any shopper’s exercise is approaching the fly, and processing its information requires entry to its newest state for us to have the ability to do constant updates. Thus to have the ability to leverage it, we want a messaging layer that enables partitioning information in a approach that any given shopper’s information would all the time get processed by just one information processor. To try this, in addition to obtain our purpose of ordered information processing, we determined to go for Kafka as a pub-sub layer. Kafka is a well known pub-sub layer that, amongst different issues, helps key options akin to transactions, excessive throughput, persistent ordering, horizontal scalability, and schema registry which are important to our capability to scale and evolve our use circumstances.

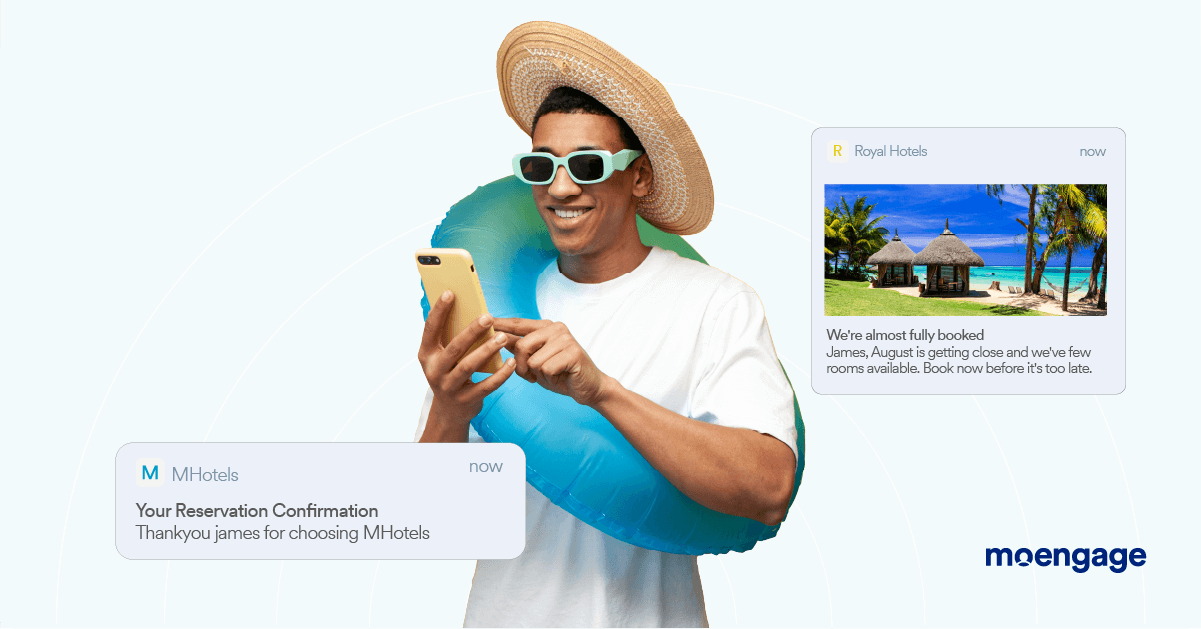

MoEngage’s real-time information ingestion helps manufacturers ship personalised messages to their prospects on the proper time

The following little bit of perception was that as a way to leverage bulk writes, quite than utilizing the database as a supply of reality, we wanted a quick caching layer as a supply of reality, permitting us to replace our databases in bulk. Our expertise with DynamoDB & ElastiCache (redis) taught us that this could be prohibitively costly. Because of this, the caching layer that we might use must be an in-memory cache. This may not solely decrease the price of operating the cache however would result in massive features in efficiency as effectively. Probably the most distinguished key-value retailer for this use case is RocksDB which might leverage each the reminiscence of an occasion in addition to its disk ought to the quantity of knowledge saved overflow the reminiscence.

Our resolution to make use of RocksDB and Kafka introduces new challenges as what was once a stateless system would now turn out to be a stateful software. Firstly, the dimensions of this RocksDB cache could be within the order of lots of of gigabytes per deployment, and the applying leveraging it may restart as a consequence of numerous causes – new function releases, occasion termination by the cloud supplier, and stability points with the applying itself. The one method to reliably run our software could be to trace the offsets at which we final learn information from Kafka and maintain that in alignment with the state of the contents of our cache. This aggregated state would have to be continued externally to permit for restoration throughout deliberate or unplanned restarts. Above all, we would want a excessive stage of configurability for this complete checkpoint course of (frequency, the hole between checkpoints, concurrent checkpoints, and many others.). Fairly than constructing the whole resolution in-house, it was extra prudent to leverage present frameworks as they’d have higher efficiency, reliability and neighborhood help. We evaluated numerous streaming frameworks and concluded that Apache Flink could be the one with all of the options and the specified efficiency at our scale. At a excessive stage, a flink job consists of a number of job managers who’re answerable for executing numerous operators that implement the info processing necessities of the applying. The job of allocating duties to job managers, monitoring their well being, and triggering checkpoints is dealt with by a separate set of processes referred to as job managers. As soon as the duty managers resume information processing, any consumer state will get saved in a finely tuned RocksDB storage engine which will get periodically checkpointed to S3 and Zookeeper as a way to facilitate sleek restarts.

How did we put all of it collectively?

After determining the best language, framework, and messaging layers, the time got here to start out constructing out the system and migrating all our present options. Our ingestion layer consists of 4 steps:

- Information validation layer that intercepts buyer information by way of numerous sources

- Inside schema administration and limits enforcement for all of the consumer, gadget, and occasions and their properties which are tracked throughout prospects in addition to customer-specific properties

- Utilizing the identifiers within the incoming requests to fetch, probably create and at last replace the state of customers and their units

- Enriching the incoming interactions that have been carried out by an finish consumer with particulars concerning the consumer that we internally retailer about them and making them obtainable to different providers

API Unification Layer

As information validation and schema administration aren’t actually tied to any specific consumer however quite to a shopper, we determined to carve these options out as a devoted service. Moreover, as we talked about earlier, information can come from numerous sources, together with cellular SDKs that we offer, information API to publish the identical by way of the shoppers’ backend, third-party companions akin to Section, advert attribution providers, CSV recordsdata, and inside APIs. As every of those was concentrating on totally different use circumstances, over time, the implementations for ingesting information throughout these sources had diverged regardless that the last word purpose was to replace the state of customers and their units. We took this chance to consolidate the conduct throughout sources inside this information validation layer and remodel every of those inputs into one consolidated output stream that might function enter to providers that implement the remainder of the performance.

Motion Streaming

Probably the most essential service is the one which offers with consumer & gadget creation in addition to occasion processing. With information validation and API variations taken care of within the upstream layer, this service depends on the identifiers of customers and units within the consolidated payload to find out what consumer and gadget which may have been concerned, which could generally contain creating their entries and on different events contain merging and even elimination of present paperwork. The latter can occur as a result of, in our enterprise area, each customers and units can have a number of identifiers, and there’s no single identifier for both that’s leveraged by all enter information sources. As soon as the entities are resolved, the subsequent part of this flink job is to course of all of the occasions inside the payload, the processing of which can lead to a change within the state of the consumer or the gadget concerned. Fairly than updating their states straight, it determines the change in state and publishes them to Kafka for use by one other downstream service to replace entities in bulk. We’re in a position to decide the change in state because the job depends on RocksDB because the supply of reality. Thus RocksDB not solely helps us lower down our database reads by greater than half, however extra importantly, it permits us to leverage bulk writes to databases.

Response Streaming

The ultimate service in our pipeline is a comparatively easy service that consumes MongoDB replace requests from Kafka and applies them in bulk, thereby vastly growing the write throughput of our database clusters. With RocksDB serving as a supply of reality, we are able to leverage full non-blocking and asynchronous I/O to do our updates which helps us vastly enhance our effectivity of writes. Not solely will we do extra writing, however we’re in a position to do them with far fewer assets! We did need to spend a while constructing a buffering mechanism that ensures that any entity has just one replace in-flight at any given time, with out which the order of write operations can by no means be assured.

MoEngage’s real-time ingestion infrastructure helps manufacturers drive extra ROI from their engagement, retention, and monetization campaigns

Fault tolerance of our system

Splitting our ingestion layer into three totally different jobs helped us obtain the effectivity that we wished, however this got here at the price of better probabilities of failure. Any one of many providers may go down as a consequence of a change in code or stability points inside our cloud. Checkpoints will help us keep away from re-processing all the information in our messaging layers, but it surely doesn’t get rid of the prospect of duplicate information processing. For this reason it was essential to make sure that every service was idempotent.

Response streaming was designed to help solely a choose set of write operations – set, unset, including to a set, and eradicating from a set. Any shopper intending to make use of this service would want to leverage a number of of those operations. This set of 4 operations has one factor in widespread – the repeated software of any of those on a doc will in the end produce the identical outcome.

API Unification Layer & Motion Streaming each depend on Kafka transactions to make sure that even when information will get processed a number of occasions, it isn’t made obtainable to downstream providers till the checkpoint completes. Care can also be taken to make sure that all time-based properties have secure values regardless of restarts and making certain that no file older than these occasions ever will get re-processed.

Deployments & configuration

Our system is designed to have the ability to run each as containerized purposes in Kubernetes in addition to on cloud present digital machines, which MoEngage has traditionally relied on. That is to make sure enterprise continuity whereas all of the kinks of our Kubernetes setup get sorted out, in addition to all engineers have a adequate understanding of it. The flexibility to spin up containers in milliseconds can’t be matched by digital machines. Kubernetes manifests for workloads internationally are managed utilizing customise, which makes it simple to keep away from any form of configuration duplication. Deployments exterior of Kubernetes are managed utilizing Terraform, Terragrunt, and CodeDeploy with in-house enhancements to make it simple to spin up new deployments, whereas configurations are managed utilizing Consul. We use HOCON because the format for configuration as they permit for the simple composition of a number of configuration recordsdata into one, thereby permitting us to interrupt configuration into small reusable chunks that can be utilized throughout deployments and for a number of providers, making it simple to make large-scale modifications in configurations. It additionally offers the power to offer configurations by way of items, eradicating any form of ambiguity within the worth of a configuration.

Learnings and Key Takeaways

Scala – Java interoperability

We carried out our system by leveraging the precept of layered structure – enterprise logic utterly freed from any infrastructure dependencies, a service layer that interacts with exterior programs and invokes the enterprise logic, and at last, the splitting of this service throughout numerous Flink operators tied collectively by a job graph. Enterprise logic was carried out in Java as we felt that hiring or coaching builders in Java could be simpler whereas the comparatively static parts of the system have been written in Scala in order to leverage the advantages of Scala’s sort system, capability to compose capabilities, error dealing with capabilities, and light-weight syntax. Nevertheless, this resolution proved to be a design blunder as we couldn’t totally leverage the most effective capabilities of both language.

Had we written our code totally in Scala, we may have:

- Leveraged property-based testing together with refined sorts to considerably scale back the burden of testing the whole codebase

- Leveraged an impact system akin to ZIO/Cats Impact as a substitute of working with vanilla Scala Future and Strive, which is commonly more durable to check

- Not have needed to take care of generally encountered exceptions in Java by leveraging Scala’s superior sort system

Had we written our code totally in Java, we might have:

- Leveraged the SpringBoot ecosystem to construct out our service layers

- Averted express separation of area and persistence fashions as Java lacks libraries to mechanically convert one to a different, and Scala libraries don’t all the time work with Java POJOs

Working Flink jobs is extra work than we thought

Whereas Flink does provide nice options that do certainly work at our scale, its function set is commonly missing in numerous elements, which ends up in extra developer effort than deliberate. A few of these weaknesses aren’t typically effectively documented, if in any respect, whereas some options require fairly a little bit of experimentation to get issues proper

- It’s fairly widespread for numerous Flink libraries to interrupt backward compatibility, which forces builders to continuously rework their code in the event that they want to leverage newer options

- Flink additionally helps numerous helpful options akin to auto-scaling, precisely as soon as semantics, and checkpoints which require a variety of experimenting to get proper with little steering on selecting methods to decide the best set of configurations. That stated, the neighborhood may be very, very responsive, and we’re grateful for his or her assist in our journey

- Integration testing of flink jobs is nearly unimaginable, given its resource-intensive nature. Unit testing is feasible however is quite cumbersome. We might recommend builders maintain virtually no logic within the Flink operators themselves and merely depend on them for information partitioning and state administration

- Schema evolution doesn’t work when the lessons leverage generics, which is sort of all the time the case. This compelled us to spend time writing our personal facet logic. What additionally caught us off-guard was that even newer Java options, akin to Non-compulsory, could cause schema evolution to not work

- We wished to leverage the published operator to simplify configuration administration. Nevertheless, since enter streams from different sources may fireplace independently, we ended up not utilizing this resolution. It could be good to have a signaling mechanism amongst operators.

- Through the years, we’ve hit fairly a couple of stability points when working with Zookeeper and Kafka which turned out to be reputable bugs of their codebase. Most of them have now been mounted however we’ve needed to face a variety of manufacturing points and constructed fast workarounds within the meantime.

MoEngage continuously strives to make enhancements to the platform that helps manufacturers ship seamless experiences to their prospects

Future Enhancements

There are a number of enhancements that we plan to work on within the coming months, a few of that are:

- We’re now at a stage the place we’re satisfied that we’ve hit the boundaries of MongoDB and, after a couple of years, might want to discover an alternate retailer for consumer and gadget properties that may help a lot increased write throughput whereas MongoDB itself could be leveraged for its indexes

- Flink’s checkpoint mechanism requires the job to be a directed acyclic graph. This makes it unimaginable to trade state inside sub-task of the identical operators. Whereas that is very effectively documented, it’s a function that we want, and we are going to discover Flink’s sibling venture Stateful Capabilities, which doesn’t have this limitation

- Flink’s not too long ago launched Kubernetes operator can deal with the whole software lifecycle, which supplies higher management over checkpoints and savepoints than our personal in-house developed resolution, and we plan to modify it sometime

- The usage of Kafka makes it tough to implement rate-limiting insurance policies as we’ve hundreds of shoppers whose information is distributed among the many partitions of a subject and Kafka itself can’t help one subject per shopper at our scale. We are going to discover alternate pub-sub layers, akin to Pulsar and Pravega, that supply better flexibility on this regard

- We thought of leveraging OpenTelemetry for end-to-end metrics and log-based monitoring and distributed tracing throughout providers; nonetheless, it has solely not too long ago moved out of alpha. We are going to discover this additional as an end-to-end monitoring resolution

Conclusion

We got down to guarantee real-time ingestion of our prospects’ information always at a scale that exposes the issues of the most effective open-source frameworks. It was an amazing problem to have the ability to be taught and turn out to be adept at a number of languages and frameworks, and we’ve totally loved knocking them off one after the other! For those who’re taken with fixing related issues, take a look at our present openings within the Engineering crew at MoEngage!

The put up How MoEngage Ensures Actual-time Information Ingestion for Its Prospects appeared first on MoEngage.