Gathering data on your website’s performance is the first step toward delivering a great user experience. Over the years, Google has provided various tools to assess and report on web performance.

Among them are Core Web Vitals, a set of performance signals that Google deems critical to all web experiences.

This article covers the current set of Core Web Vitals, along with key recommendations and tools for improving your web performance so that you deliver a good page experience for users.

A look at the evolution of web performance

Gone are the days when improving site performance was straightforward.

In the past, bloated resources and laggy connections often held up websites. But you could outperform competitors by compressing some images, enabling text compression, or minifying your style sheets and JavaScript modules.

Today, connection speeds are faster. Most resources are compressed by default, and many plugins handle image compression, cache deployment, etc.

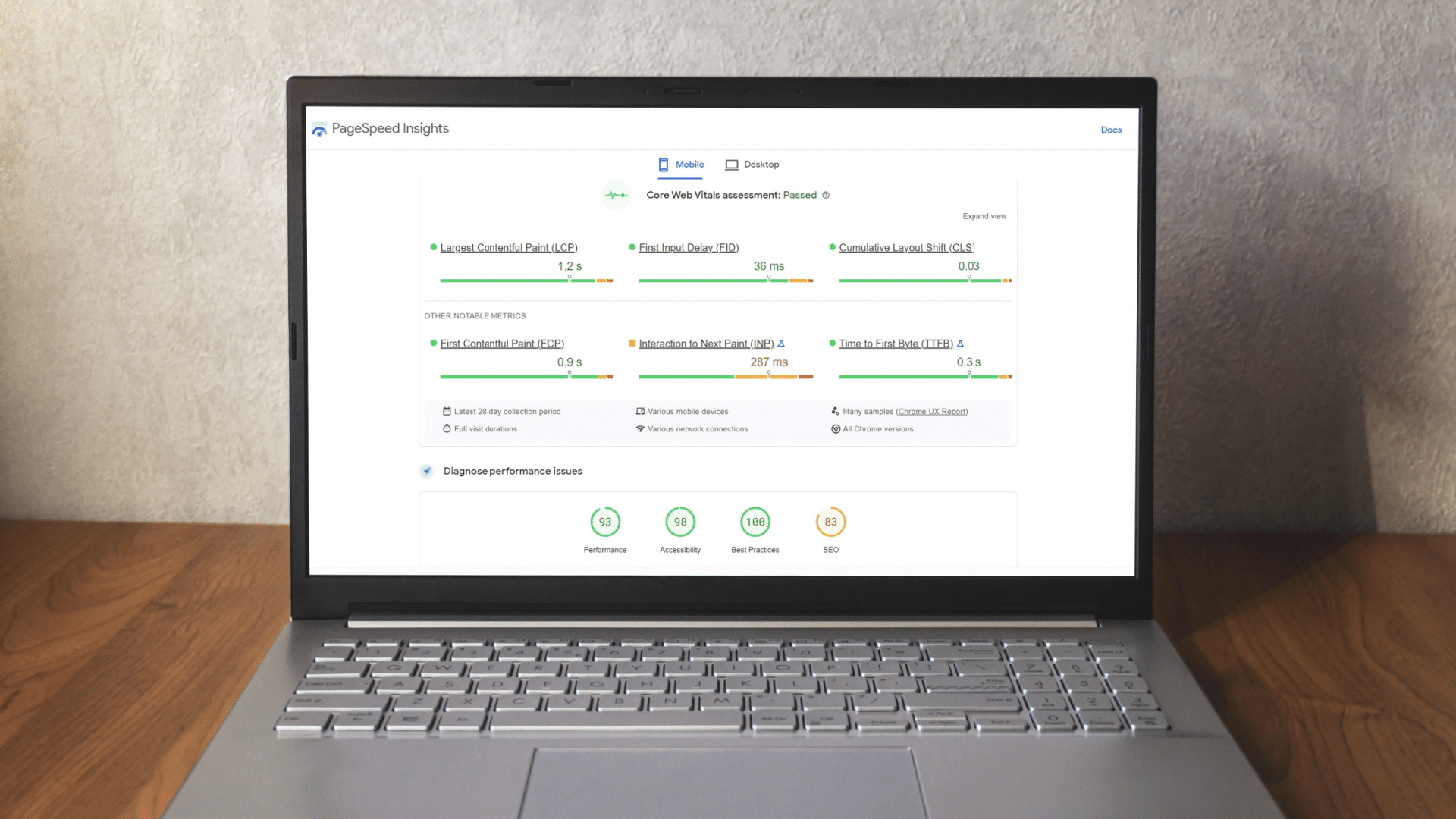

Google’s quest for a faster web persists. PageSpeed Insights (PSI) is still live on web.dev, serving as the best tool for evaluating individual page loads.

While many feel that PSI ratings are unnecessarily punitive, it’s still the closest we can get to how Google might weigh and rank sites in terms of page speed signals.

To pass the latest iteration of Google’s page speed test, you’ll need to satisfy the Core Web Vitals assessment.

Understanding the Core Web Vitals

Core Web Vitals are a set of metrics integrated into the broader page experience search signals introduced in 2021. Each metric “represents a distinct facet of the user experience, is measurable in the field, and reflects the real-world experience of a critical user-centric outcome,” according to Google.

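The current set of Core Web Vitals metrics covered here includes:

- First Contentful Paint (FCP).

- First Input Delay (FID).

- Interaction to Next Paint (INP).

- Time to First Byte (TTFB).

- Largest Contentful Paint (LCP).

- Cumulative Layout Shift (CLS).

Strictly speaking, Google’s Core Web Vitals are LCP, CLS, and a responsiveness metric (FID, which is being replaced by INP). FCP and TTFB are supporting metrics that help diagnose them.

Web.dev explains how each metric works as follows.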

First Contentful Paint (FCP)

“The First Contentful Paint (FCP) metric measures the time from when the page starts loading to when any part of the page’s content is rendered on the screen. For this metric, ‘content’ refers to text, images (including background images), `<svg>` elements, or non-white `<canvas>` elements.”

What this means for technical SEOs

FCP is fairly easy to understand. As a webpage loads, certain elements arrive (or “are painted”) before others. In this context, “painting” means on-screen rendering.

Once any part of the page has been rendered (let’s say the main nav bar loads in before other elements), the FCP is logged at that point.

Think of it as how quickly the page visibly starts loading for users. The page load won’t be complete, but it will have started.
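To check FCP outside of PSI, you can read it from the browser’s Paint Timing API. That API records a `paint` entry named `first-contentful-paint`. Below is a minimal TypeScript sketch that assumes you already have the entries in hand. `PaintEntry` and `findFcp` are hypothetical names, and the logic is kept DOM-free so it runs anywhere.

```typescript
// Minimal shape of a Paint Timing entry (a subset of PerformancePaintTiming).
interface PaintEntry {
  name: string;      // "first-paint" or "first-contentful-paint"
  startTime: number; // milliseconds since the navigation started
}

// Returns FCP in milliseconds, or undefined if nothing contentful has painted yet.
function findFcp(entries: PaintEntry[]): number | undefined {
  const fcp = entries.find((entry) => entry.name === "first-contentful-paint");
  return fcp ? fcp.startTime : undefined;
}

// In a browser, the entries could come from an observer, for example:
//   new PerformanceObserver((list) => {
//     const fcp = findFcp(list.getEntries());
//     if (fcp !== undefined) console.log(`FCP: ${fcp.toFixed(0)} ms`);
//   }).observe({ type: "paint", buffered: true });
```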

First Input Delay (FID)

“FID measures the time from when a user first interacts with a page (that is, when they click a link, tap on a button, or use a custom, JavaScript-powered control) to the time when the browser is actually able to begin processing event handlers in response to that interaction.”

What this means for technical SEOs

FID is a user interaction responsiveness metric set to be replaced by Interaction to Next Paint (INP) in March 2024.

If a user interacts with an on-page element (e.g., clicking a link, sorting a table, or applying faceted navigation), how long will it take for the site to begin processing that request?
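Under the hood, FID is the gap between when the first interaction happened and when its event handlers could start running. The Event Timing API exposes both values on a `first-input` entry, as `startTime` and `processingStart`. Here is a minimal sketch. `FirstInputEntry` and `firstInputDelay` are illustrative names, not part of any library.

```typescript
// Subset of the Event Timing "first-input" entry used for FID.
interface FirstInputEntry {
  startTime: number;       // when the user interacted (ms)
  processingStart: number; // when the browser began running event handlers (ms)
}

// FID only measures the wait before handlers start; how long they then run is not included.
function firstInputDelay(entry: FirstInputEntry): number {
  return entry.processingStart - entry.startTime;
}

// Browser usage (sketch):
//   new PerformanceObserver((list) => {
//     const first = list.getEntries()[0] as unknown as FirstInputEntry;
//     console.log(`FID: ${firstInputDelay(first)} ms`);
//   }).observe({ type: "first-input", buffered: true });
```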

Interaction to Next Paint (INP)

“INP is a metric that assesses a page’s overall responsiveness to user interactions by observing the latency of all click, tap, and keyboard interactions that occur throughout the lifespan of a user’s visit to a page. The final INP value is the longest interaction observed, ignoring outliers.”

What this means for technical SEOs

As mentioned, INP will replace FID as a Core Web Vital in March 2024.

INP factors in deeper information than FID, which makes it more detailed and sophisticated:

- It counts keyboard interactions as well as clicks and taps.

- It observes every interaction during the visit, not only the first.

- It measures the full latency until the next frame is painted, not just the delay before event handlers start.
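The “ignoring outliers” part is worth spelling out. As I understand the approach web.dev describes (and Google’s `web-vitals` library implements), it works in three steps:

- Events are grouped per interaction.
- The slowest event of each interaction is the one that counts.
- One of the worst interactions is skipped for every 50 interactions, which approximates the 98th percentile.

The sketch below assumes that rule. The names are hypothetical, and `web-vitals` remains the reference implementation.

```typescript
// One Event Timing entry, reduced to the fields INP needs.
interface InteractionEvent {
  interactionId: number; // shared by all events of one interaction (e.g. pointerdown, pointerup, click); 0 = not an interaction
  duration: number;      // input delay + processing + time until the next frame is presented (ms)
}

// Approximates INP: the slowest interaction, skipping one outlier per 50 interactions.
function estimateInp(events: InteractionEvent[]): number | undefined {
  // Keep the slowest event for each interaction.
  const worstPerInteraction = new Map<number, number>();
  for (const event of events) {
    if (event.interactionId === 0) continue; // e.g. hover events are not interactions
    const previous = worstPerInteraction.get(event.interactionId) ?? 0;
    worstPerInteraction.set(event.interactionId, Math.max(previous, event.duration));
  }

  const durations = Array.from(worstPerInteraction.values()).sort((a, b) => b - a);
  if (durations.length === 0) return undefined;

  // With fewer than 50 interactions this is simply the worst one.
  const index = Math.min(durations.length - 1, Math.floor(durations.length / 50));
  return durations[index];
}
```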

Time to First Byte (TTFB)

“TTFB is a metric that measures the time between the request for a resource and when the first byte of a response begins to arrive.”

What this means for technical SEOs

Once a “resource” (e.g., an embedded image, JavaScript module, CSS stylesheet, etc.) is requested, how long will it take for the site to begin delivering that resource?

Let’s say you visit a webpage, and on that page is an embedded image. It starts to load but hasn’t finished loading yet. How long until the very first byte of that image is delivered from the server to the client (web browser)?
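For any request, the Navigation and Resource Timing APIs expose timestamps that let you split TTFB into its parts. That helps you tell a slow server apart from slow DNS or connection setup. This sketch assumes a timing entry such as the page’s `performance.getEntriesByType("navigation")[0]` or an image’s `resource` entry. The interface mirrors a subset of the real fields, while `ttfbBreakdown` is a hypothetical helper. Cross-origin resources served without a `Timing-Allow-Origin` header report zeros for these detailed fields, so the helper returns `undefined` in that case.

```typescript
// Subset of the fields shared by Navigation Timing and Resource Timing entries (ms).
interface RequestTiming {
  startTime: number;
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  requestStart: number;
  responseStart: number;
}

interface TtfbParts {
  ttfb: number;       // start of the request to the first response byte
  dns: number;        // DNS lookup (0 if cached)
  connection: number; // TCP connection, including any TLS handshake (0 if reused)
  waiting: number;    // request sent to first byte: server processing plus latency
}

// Splits TTFB into its main phases; returns undefined when detailed timings are hidden.
function ttfbBreakdown(t: RequestTiming): TtfbParts | undefined {
  if (t.responseStart === 0) return undefined; // cross-origin without Timing-Allow-Origin
  return {
    ttfb: t.responseStart - t.startTime,
    dns: t.domainLookupEnd - t.domainLookupStart,
    connection: t.connectEnd - t.connectStart,
    waiting: t.responseStart - t.requestStart,
  };
}
```

A large `waiting` value points at the server or back end. Large `dns` or `connection` values point at network setup instead.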

Largest Contentful Paint (LCP)

“The Largest Contentful Paint (LCP) metric reports the render time of the largest image or text block visible within the viewport, relative to when the page first started loading.”

What this means for technical SEOs

LCP is one of the most important metrics, yet the trickiest to satisfy.

Once the largest chunk of visual media (i.e., text or image) has loaded, the LCP is logged.

You can read this as: how long does it take for the vast bulk of a page’s main content to load?

There may still be small elements loading further down the page that most users won’t notice.

But by the time the LCP is logged, the large and obvious chunk of your page has loaded. If it takes too long for this to occur, you’ll fail the LCP check.
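Mechanically, the browser emits a new `largest-contentful-paint` entry each time a larger element renders. It stops reporting candidates once the user interacts with the page, so the final LCP is the largest candidate seen by then. Here is a minimal sketch with hypothetical names. It assumes you have already collected the candidate entries, for example through a `PerformanceObserver` observing `largest-contentful-paint`.

```typescript
// A largest-contentful-paint candidate, reduced to what matters here.
interface LcpCandidate {
  startTime: number; // when the element rendered (ms)
  size: number;      // its visible area in the viewport (px²)
}

// Returns the LCP time: the render time of the largest candidate reported.
function largestContentfulPaint(candidates: LcpCandidate[]): number | undefined {
  let largest: LcpCandidate | undefined;
  for (const candidate of candidates) {
    // ">=" keeps the later entry on ties, matching "last reported wins".
    if (!largest || candidate.size >= largest.size) largest = candidate;
  }
  return largest ? largest.startTime : undefined;
}
```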

Cumulative Layout Shift (CLS)

“CLS is a measure of the largest burst of layout shift scores for every unexpected layout shift that occurs during the entire lifespan of a page.

A layout shift occurs any time a visible element changes its position from one rendered frame to the next. (See below for details on how individual layout shift scores are calculated.)

A burst of layout shifts, known as a session window, is when one or more individual layout shifts occur in rapid succession with less than 1-second in between each shift and a maximum of 5 seconds for the total window duration.

The largest burst is the session window with the maximum cumulative score of all layout shifts within that window.”
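The session-window rule in that definition is easier to follow as code. This sketch implements the calculation as quoted above and assumes a list of `layout-shift` entries. As web.dev describes, shifts flagged with `hadRecentInput` (those within 500 ms of user input) are excluded. `LayoutShift` and `cumulativeLayoutShift` are illustrative names.

```typescript
// A layout-shift entry, reduced to the fields CLS needs.
interface LayoutShift {
  startTime: number;       // when the shift happened (ms)
  value: number;           // the layout shift score for that frame
  hadRecentInput: boolean; // true if the shift followed user input within 500 ms
}

// CLS = the largest session window, where a window groups shifts less than
// 1 s apart and spans less than 5 s from its first shift.
function cumulativeLayoutShift(shifts: LayoutShift[]): number {
  let largestWindow = 0;
  let windowValue = 0;
  let windowStart = 0;
  let previousShift = 0;
  let inWindow = false;

  for (const shift of shifts) {
    if (shift.hadRecentInput) continue; // expected shifts after input are ignored

    const continuesWindow =
      inWindow &&
      shift.startTime - previousShift < 1000 &&
      shift.startTime - windowStart < 5000;

    if (continuesWindow) {
      windowValue += shift.value;
    } else {
      windowValue = shift.value; // start a new session window
      windowStart = shift.startTime;
      inWindow = true;
    }
    previousShift = shift.startTime;
    largestWindow = Math.max(largestWindow, windowValue);
  }
  return largestWindow;
}
```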

What this means for technical SEOs

Back in the day, when page speed optimization was simpler, many site owners realized they could achieve incredibly high page speed ratings by simply deferring all of the render-blocking resources (typically, CSS sheets and JavaScript modules).

This was great at speeding up page loads but made the web a more glitchy and annoying navigation experience.

If your CSS, which controls all the styling of your page, is deferred, then the contents of the page can load before the CSS rules are applied.

This means the contents of your page will load unstyled and then jump around a bit as the CSS loads in.

This is really annoying if you load a page and go to click on a link, but then the link jumps and you click on the wrong link.

If you’re a bit OCD like me, such experiences are absolutely infuriating (even though they only cost seconds of time).

Because site owners were trying to “game” page speed ratings by deferring all resources, Google needed a counter-metric that would offset all the page speed gains against the user experience deficit.

Enter Cumulative Layout Shift (CLS). This is one tricky customer, out to ruin your day if you try to broad-brush apply page speed boosts without thinking of your users.

CLS will basically analyze your page loads for glitchy shifts and delayed CSS rules.

If there are too many, you’ll fail the Core Web Vitals assessment despite having satisfied all the speed-related metrics.

Assessing your Core Web Vitals for better UX and SEO results

One of the best ways to analyze a single webpage’s performance is to load it into PageSpeed Insights. The view is split into a combination of:

- URL-level data.

- Origin (domain-level) data.

- Lab data.

- Field data.
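The same four views are also available programmatically through the PageSpeed Insights API (v5), which helps when you want to track them over time. The sketch below builds a request URL and pulls out one value from each view. The response field names (`loadingExperience`, `originLoadingExperience`, `lighthouseResult`, `overall_category`, `LARGEST_CONTENTFUL_PAINT_MS`) reflect the v5 response as I understand it, so verify them against the API reference before relying on them. `buildPsiUrl` and `summarizePsi` are hypothetical helpers.

```typescript
const PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed";

// Builds a PSI v5 request; the API key is optional for light use.
function buildPsiUrl(pageUrl: string, strategy: "mobile" | "desktop" = "mobile", apiKey?: string): string {
  const params = new URLSearchParams({ url: pageUrl, strategy });
  if (apiKey) params.set("key", apiKey);
  return `${PSI_ENDPOINT}?${params.toString()}`;
}

// Only the response fields read below are modelled (assumed v5 shape).
interface FieldMetric {
  percentile: number;
  category: string; // e.g. "FAST", "AVERAGE", "SLOW"
}
interface LoadingExperience {
  overall_category?: string;
  metrics?: Record<string, FieldMetric | undefined>;
}
interface PsiResponse {
  loadingExperience?: LoadingExperience;       // field data for this URL
  originLoadingExperience?: LoadingExperience; // field data for the whole origin
  lighthouseResult?: { categories?: { performance?: { score?: number } } }; // lab data
}

// Pulls one value from each view: URL field data, origin field data, and lab data.
function summarizePsi(response: PsiResponse) {
  const urlMetrics = response.loadingExperience?.metrics;
  const lcp = urlMetrics ? urlMetrics["LARGEST_CONTENTFUL_PAINT_MS"] : undefined;
  return {
    urlField: response.loadingExperience?.overall_category,
    originField: response.originLoadingExperience?.overall_category,
    labScore: response.lighthouseResult?.categories?.performance?.score, // 0 to 1
    urlLcpMs: lcp?.percentile,
  };
}
```

In practice you would pass the parsed JSON from `fetch(buildPsiUrl("https://techcrunch.com/"))` into `summarizePsi`.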

To make sense of this, we need to look at an example:

https://pagespeed.web.dev/analysis/https-techcrunch-com/zo8d0t4x1p?form_factor=mobile

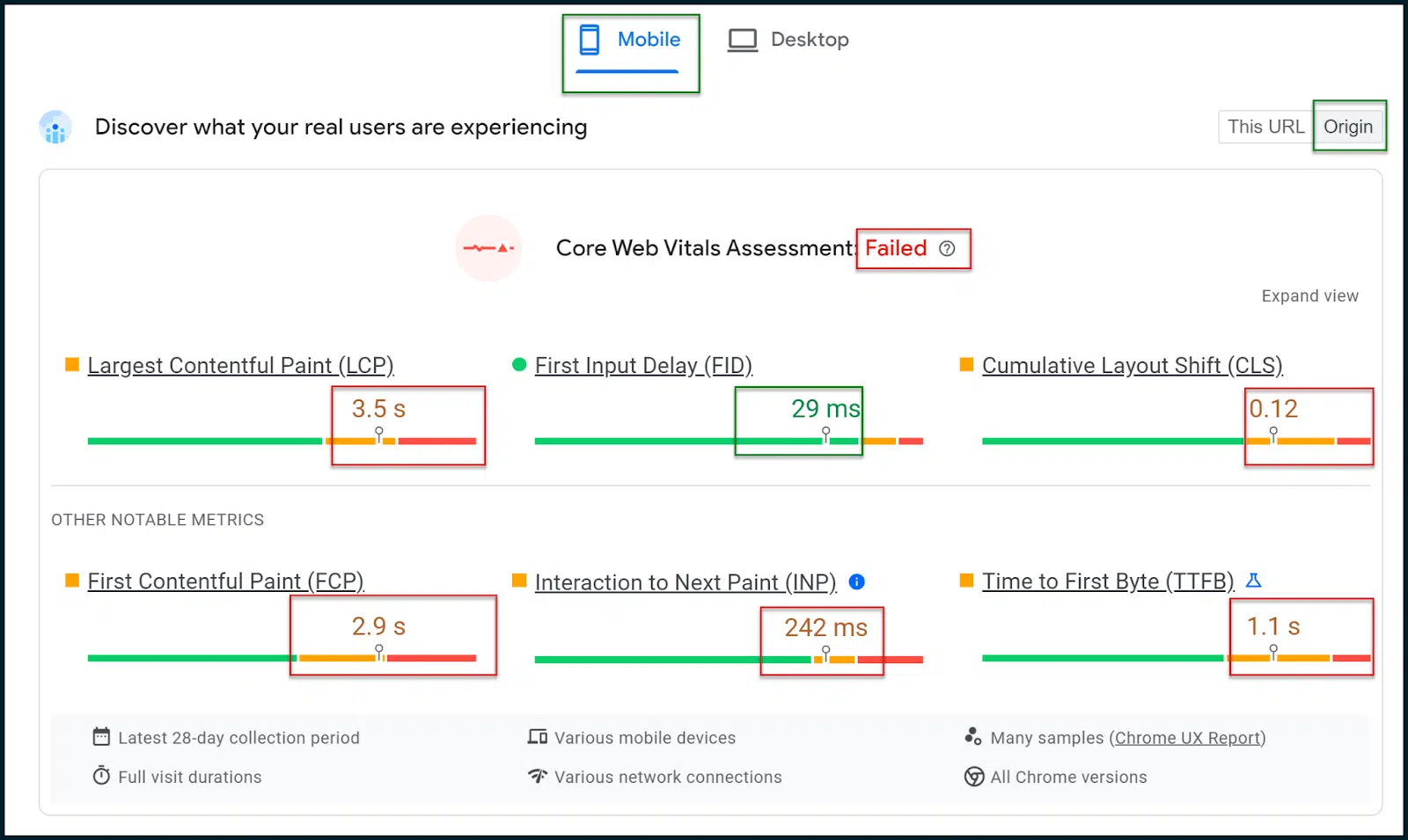

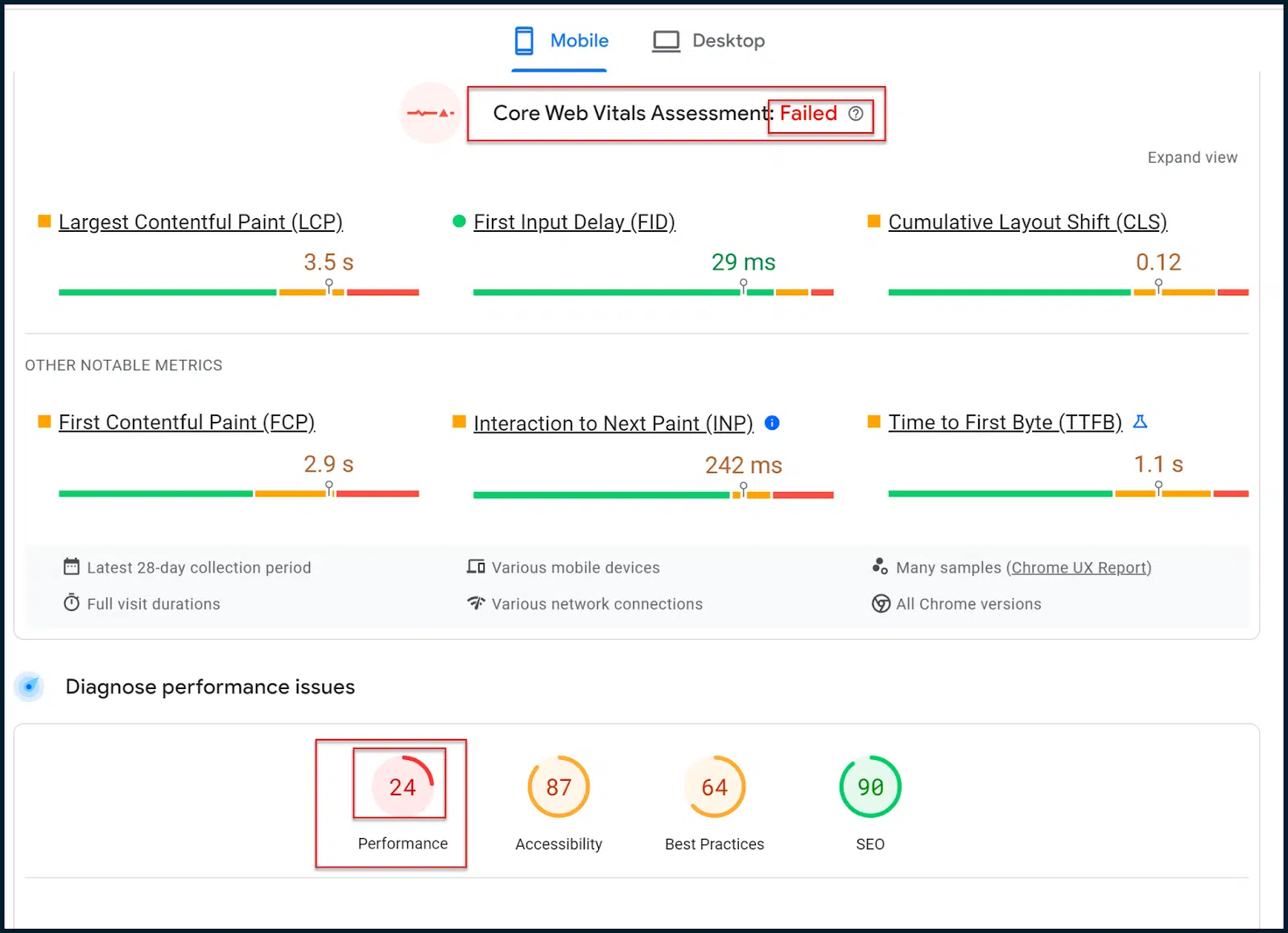

Here, we can see the page speed ratings and metrics for the TechCrunch homepage.

Above, you can see that the Core Web Vitals assessment has failed.

In a mobile-first web, it’s important to select the Mobile results tab, which should be shown by default (these are the results that really matter).

Select the Origin toggle so that you see general data averaged across your site’s domain rather than just the homepage (or whichever page you put in to scan).

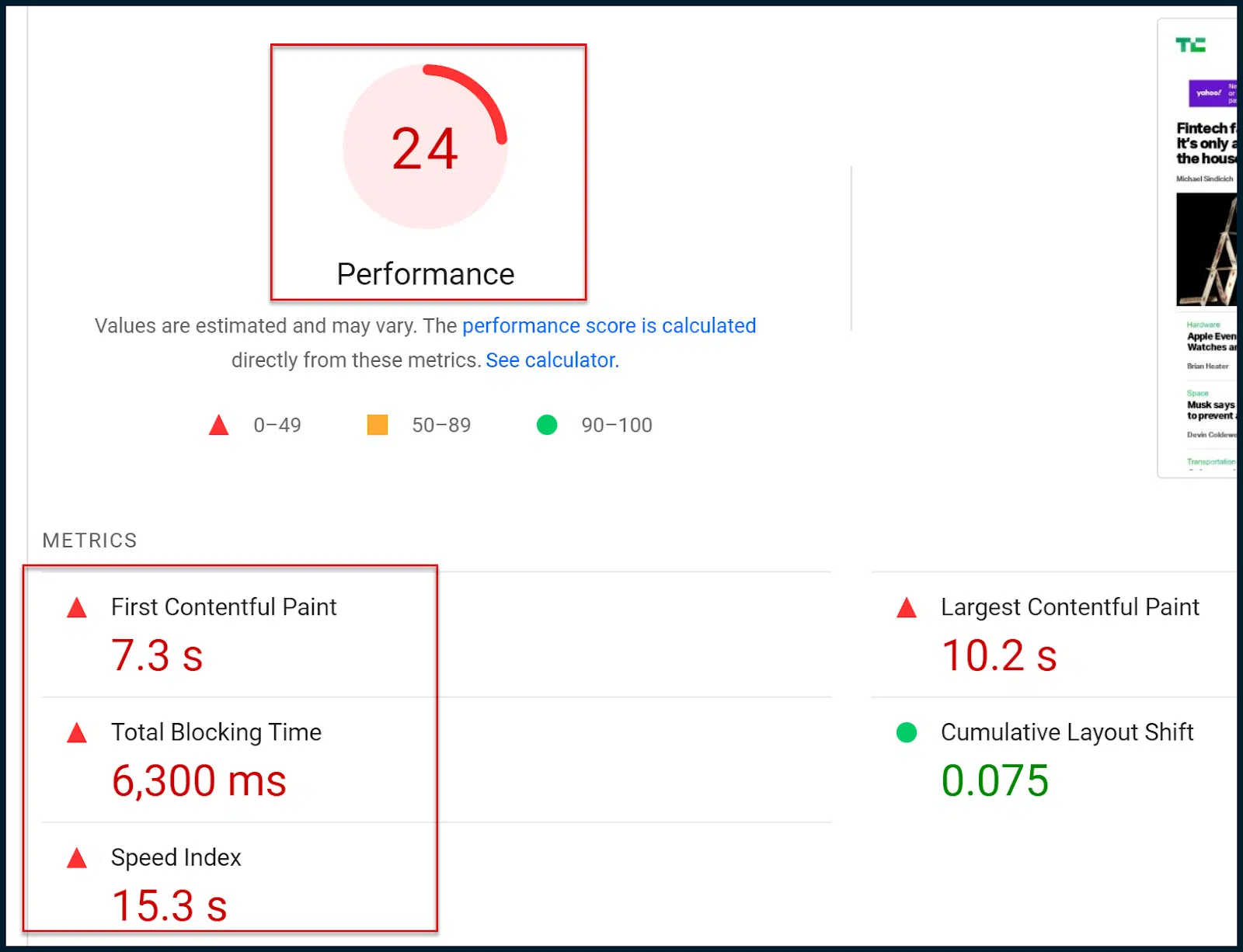

Further down the page, you will see the old, familiar numeric page speed rating.

So, what’s the difference between the new Core Web Vitals assessment and the old page speed rating?

Essentially, the new Core Web Vitals assessment (Pass / Fail) is based on field (real user) data.

The old numeric rating is based on simulated mobile crawls and lab data, which are only estimates.

In other words, when it comes to adjusting search rankings, Google has shifted to the Core Web Vitals assessment.

To be clear, the simulated lab data can give a nice breakdown of what’s going wrong, but Google does not use that numeric rating within its ranking algorithms.

Conversely, the Core Web Vitals assessment doesn’t offer much granular information. However, this assessment is factored into Google’s ranking algorithms.

So, your main goal is to use the richer lab diagnostics so that you eventually pass the Core Web Vitals assessment (derived from field data).

Remember that when you make changes to your site, the numeric rating may reflect them immediately, but you’ll need to wait for Google to gather more field data before you can pass the Core Web Vitals assessment. The field data in PSI comes from the Chrome UX Report (CrUX), which aggregates a rolling 28-day window of real-user visits, so improvements feed in gradually.
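It helps to know how the Pass / Fail verdict is reached. The assessment looks at the 75th percentile of field data for each core metric (LCP, INP, and CLS, with FID in INP’s place before the switch). It passes only if all of them are in the “good” range. Here is a sketch of that logic using the published web.dev thresholds. The percentile is a simple nearest-rank calculation rather than CrUX’s own histogram-based aggregation. `rate`, `percentile`, and `passesCoreWebVitals` are hypothetical helpers.

```typescript
type Rating = "good" | "needs-improvement" | "poor";

// web.dev thresholds: [upper bound of "good", value above which it is "poor"].
const THRESHOLDS: Record<string, [number, number]> = {
  LCP: [2500, 4000], // ms
  INP: [200, 500],   // ms
  CLS: [0.1, 0.25],  // unitless score
  FCP: [1800, 3000], // ms (supporting metric)
  TTFB: [800, 1800], // ms (supporting metric)
};

function rate(metric: string, value: number): Rating {
  const [good, poor] = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value > poor) return "poor";
  return "needs-improvement";
}

// Nearest-rank percentile of a non-empty list of samples.
function percentile(values: number[], p: number): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(0, rank - 1)];
}

// Passes only if the 75th percentile of every core metric is "good".
function passesCoreWebVitals(samples: { LCP: number[]; INP: number[]; CLS: number[] }): boolean {
  const core = ["LCP", "INP", "CLS"] as const;
  return core.every((metric) => rate(metric, percentile(samples[metric], 75)) === "good");
}
```

Because the verdict hinges on the 75th percentile, a quarter of visits can be slow and the page still passes. A fix that only helps your fastest users won’t move it.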

You’ll notice that both the Core Web Vitals assessment and the old page speed rating use some of the same metrics.

For example, both of them reference First Contentful Paint (FCP), Largest Contentful Paint (LCP), and Cumulative Layout Shift (CLS).

In a way, the types of metrics examined by each rating system are fairly similar. It’s the level of detail and the source of the tested data that differ.

You must aim to pass the field-based Core Web Vitals assessment. However, since that data isn’t very rich, you may want to leverage the traditional lab data and diagnostics to make progress.

The hope is that you can pass the Core Web Vitals assessment by addressing the lab opportunities and diagnostics. But do remember, these two assessments are not intrinsically linked.


Assessing your CWVs via PageSpeed Insights

Now that you know the main Core Web Vitals metrics and how they can technically be satisfied, it’s time to run through an example.

Let’s return to our examination of TechCrunch:

https://pagespeed.web.dev/analysis/https-techcrunch-com/zo8d0t4x1p?form_factor=mobile

Here, FID is satisfied, and INP only fails by a narrow margin.

CLS has some issues, but the main problems are with LCP and FCP.

Let’s see what PageSpeed Insights has to say in terms of Opportunities and Diagnostics.

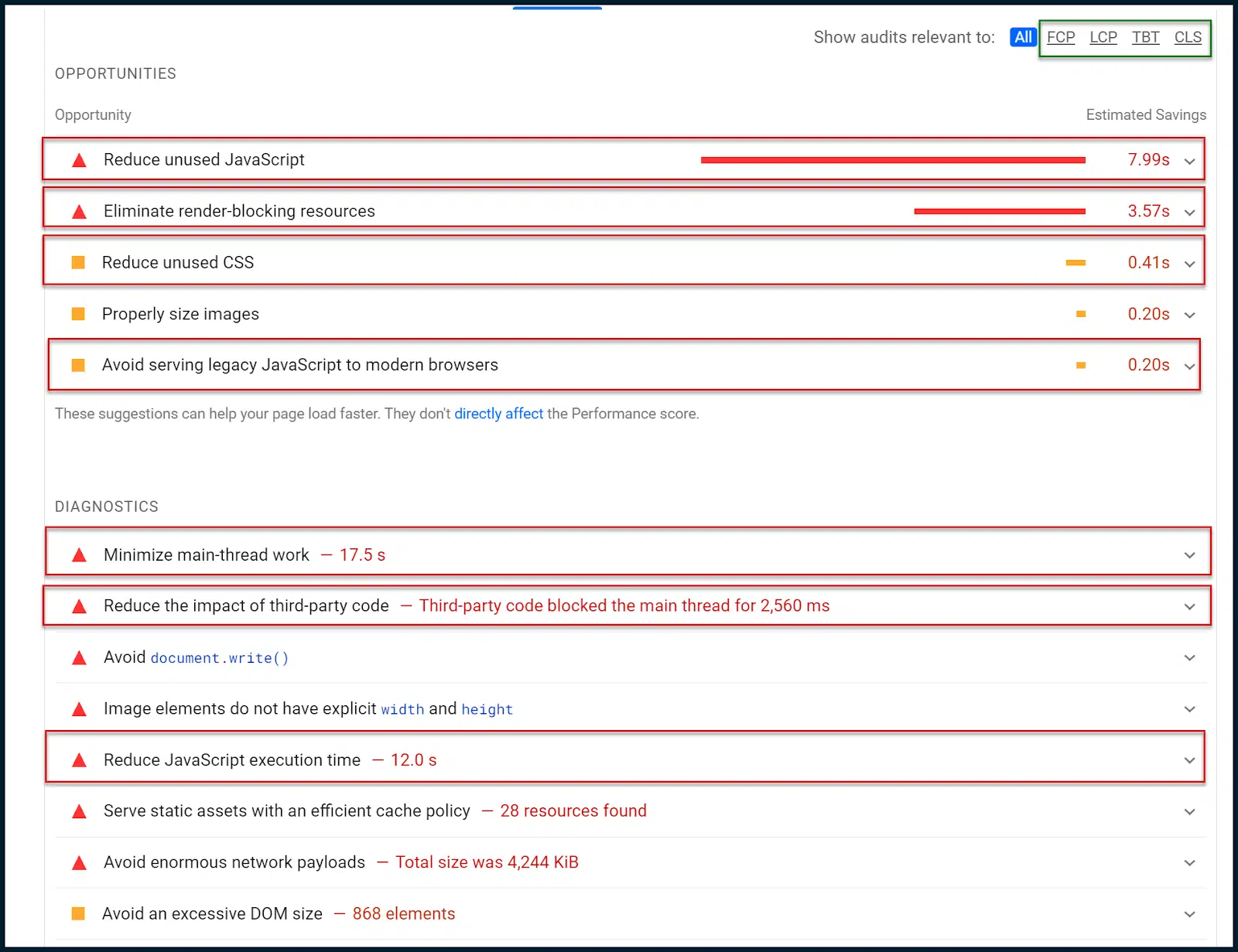

We must now shift over from the field data to the lab data and attempt to isolate any patterns that may be impacting the Core Web Vitals.

Above, you can see a small sub-navigation in the upper right corner, boxed in green.

You can use this to narrow the different opportunities and diagnostics down to specific Core Web Vitals metrics.

In this case, however, the data tells a very clear story without narrowing.

Firstly, we’re told to reduce unused JavaScript. This means that some JavaScript is being loaded without being executed.

There are also notes to reduce unused CSS. In other words, some CSS styling is loading that isn’t being applied (a similar problem).

We’re also told to eliminate render-blocking resources, which are almost always related to JavaScript modules and CSS sheets.

Render-blocking resources must be deferred to stop them from blocking a page load. However, as we have already explored, this may disrupt the CLS rating.

Because of this, it would be wise to begin crafting both a critical CSS and a critical JavaScript rendering path. Doing this will inline the JavaScript and CSS needed above the fold while deferring the rest.

This approach allows the site owner to satisfy page loading demands while balancing the CLS metric. It’s not an easy thing to do and usually requires a senior web developer.
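As a rough illustration of what a critical rendering path looks like in markup, the sketch below generates a `<head>` fragment that does three things:

- It inlines the critical CSS.
- It loads the full stylesheet without blocking render, using the common `rel="preload"` + `onload` pattern with a `<noscript>` fallback.
- It marks non-critical scripts `defer`.

`buildHead` and its options are hypothetical. The inputs are assumed to be trusted (nothing is HTML-escaped), and extracting the critical CSS itself is left to tooling or to your developer.

```typescript
interface HeadOptions {
  criticalCss: string;   // above-the-fold rules only
  stylesheets: string[]; // full stylesheets, loaded without blocking render
  scripts: string[];     // non-critical scripts
}

// Builds a <head> fragment. Inputs are assumed trusted: nothing is escaped.
function buildHead(options: HeadOptions): string {
  const lines: string[] = [`<style>${options.criticalCss}</style>`];

  for (const href of options.stylesheets) {
    // Preload the file, then switch it to a stylesheet once it has downloaded.
    lines.push(`<link rel="preload" href="${href}" as="style" onload="this.onload=null;this.rel='stylesheet'">`);
    // Without JavaScript the onload swap never happens, so fall back to a normal link.
    lines.push(`<noscript><link rel="stylesheet" href="${href}"></noscript>`);
  }

  for (const src of options.scripts) {
    // defer: download alongside HTML parsing, execute in order after parsing finishes.
    lines.push(`<script src="${src}" defer></script>`);
  }
  return lines.join("\n");
}
```

The critical rules are already inline, so above-the-fold content renders styled from the first paint. That keeps the deferred stylesheet from showing up as a layout shift.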

Since we also found unused CSS and JavaScript, we can also commission a general JavaScript code audit to see if JavaScript could be deployed more intelligently.

Let’s return to Opportunities and Diagnostics.

Now, we want to focus on the diagnostics. Google deliberately throttles these checks, simulating a slow 4G connection and a mid-tier mobile CPU, which is why items such as main-thread work seem so long (17 seconds).

This is deliberate, to cater to users with low bandwidth and/or slow devices, which are common worldwide.

I want to draw your attention here to “Minimize main-thread work.” This single entry can be a goldmine of insights.

By default, most of a webpage’s rendering and script execution (JavaScript) tasks are pushed through the client web browser’s main processing thread (one single processing thread). You can see how this causes significant page loading bottlenecks.

Even if all of your JavaScript is perfectly minified and shipped to the user’s browser quickly, it must wait in a single-thread processing queue by default, meaning that only one script can be executed at once.

So, quickly shipping loads of JavaScript to your user is the equivalent of firing a firehose at a brick wall with a one-centimeter gap.

Good job delivering, but it’s not all going to get through!
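One common mitigation is to break long-running work into smaller chunks and yield back to the browser between them. That way, clicks and paints are not stuck behind a single long task. This doesn’t make the JavaScript run faster. It just stops the work from monopolizing the queue. Here is a minimal sketch that assumes the work can be split per item. `processInChunks` and the chunk size of 50 are illustrative choices, and `setTimeout(…, 0)` is used as a widely supported way to yield.

```typescript
// Resolves on a later task, giving the browser a chance to handle input and paint.
function yieldToMainThread(): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(() => resolve(), 0));
}

// Runs `work` over `items` in batches, yielding between batches instead of
// holding the main thread for one long task.
async function processInChunks<T, R>(items: T[], work: (item: T) => R, chunkSize = 50): Promise<R[]> {
  const results: R[] = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    for (const item of items.slice(start, start + chunkSize)) {
      results.push(work(item));
    }
    if (start + chunkSize < items.length) await yieldToMainThread();
  }
  return results;
}
```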

More and more, Google is making client-side responsiveness our responsibility. Like it or lump it, that’s how it is (so you’d better get familiar).

You might say in frustration, “Why is it like this!? Web browsers have had access to multiple processing threads for years, and even mobile browsers have caught up. There’s no need for things to be this awkward, is there?”

Actually, yes. Some scripts rely on the output of other scripts before they themselves can execute.

If all browsers were suddenly to start processing all JavaScript in parallel, out of sequence, most of the web would probably crash and burn.

So, there’s a good reason that sequential script execution is the default behavior for modern web browsers. I keep emphasizing the word “default.” Why is that?

It’s because there are other options. One is to spare the client’s browser from processing the scripts by doing that work on the server, on the user’s behalf. This is known as server-side rendering (SSR).

It’s a powerful tool for untangling client-side JavaScript execution knots, but it’s also very expensive.

Your server must process all script requests (from all users) faster than your average user’s browser processes a single script. Let that one sink in for a moment.

Not a fan of that option? OK, let’s explore JavaScript parallelization. The basic idea is to leverage web workers, which run scripts on background threads, to define which scripts will run in sequence on the main thread vs. which will run in parallel. Workers cannot access the DOM, so this suits data processing rather than code that manipulates the page.

While you can force JavaScript to load in parallel, doing this by default is extremely inadvisable. Integrating technology such as this can largely mitigate the need for SSR in many cases.

However, it will be very fiddly to implement and will require (you guessed it!) the time of a senior web developer.

The same person you hire to do your full JavaScript code audit may be able to help you with this, too. If you combine JavaScript parallelization with a critical JavaScript rendering path, then you’re really flying.
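For a feel of what moving work off the main thread involves, here is a minimal sketch. It runs a CPU-heavy, DOM-free function in a dedicated Web Worker created from a Blob URL. Where `Worker` isn’t available (for example, under Node), it falls back to running the function inline. `sumOfSquares` stands in for real work, and `runOffMainThread` is a hypothetical helper, not a library API.

```typescript
// CPU-heavy, DOM-free work: the kind of code that can safely leave the main thread.
function sumOfSquares(values: number[]): number {
  let total = 0;
  for (const value of values) total += value * value;
  return total;
}

// Runs sumOfSquares in a dedicated Web Worker when the environment supports it,
// otherwise runs it inline (e.g. under Node, where there is no global Worker).
function runOffMainThread(values: number[]): Promise<number> {
  const g = globalThis as any;
  const canUseWorker =
    typeof g.Worker === "function" &&
    typeof g.Blob === "function" &&
    typeof g.URL?.createObjectURL === "function";
  if (!canUseWorker) return Promise.resolve(sumOfSquares(values));

  // Build the worker script from the function's own source to avoid duplicating logic.
  const source =
    `const sumOfSquares = ${sumOfSquares.toString()};\n` +
    `self.onmessage = (event) => self.postMessage(sumOfSquares(event.data));`;
  const scriptUrl = g.URL.createObjectURL(new g.Blob([source], { type: "text/javascript" }));
  const worker = new g.Worker(scriptUrl);

  return new Promise<number>((resolve, reject) => {
    const cleanUp = () => {
      worker.terminate();
      g.URL.revokeObjectURL(scriptUrl);
    };
    worker.onmessage = (event: { data: number }) => {
      cleanUp();
      resolve(event.data);
    };
    worker.onerror = (error: unknown) => {
      cleanUp();
      reject(error);
    };
    worker.postMessage(values); // the array is copied to the worker, not shared
  });
}
```

The main thread only posts the data and waits for a message back, so input handling and painting carry on while the worker computes.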

In this example, here’s the really interesting thing:

You can immediately see that while the main thread is occupied for 17 seconds, JavaScript execution accounts for 12 seconds.

Does that mean 12 seconds of the 17 seconds of thread work are JavaScript execution? That’s highly likely.

We know that all JavaScript is pushed through the main thread by default.

That’s also how WordPress, the active CMS, is set up by default.

Since this site is running WordPress, all of those 12 seconds of JavaScript execution time likely come out of the 17 seconds of main-thread work.

That’s a great insight because it tells us that most of the main processing thread’s time (roughly 70%) is spent executing JavaScript. And looking at the number of referenced scripts, that’s not hard to believe.

It’s time to get technical and take off the training wheels.

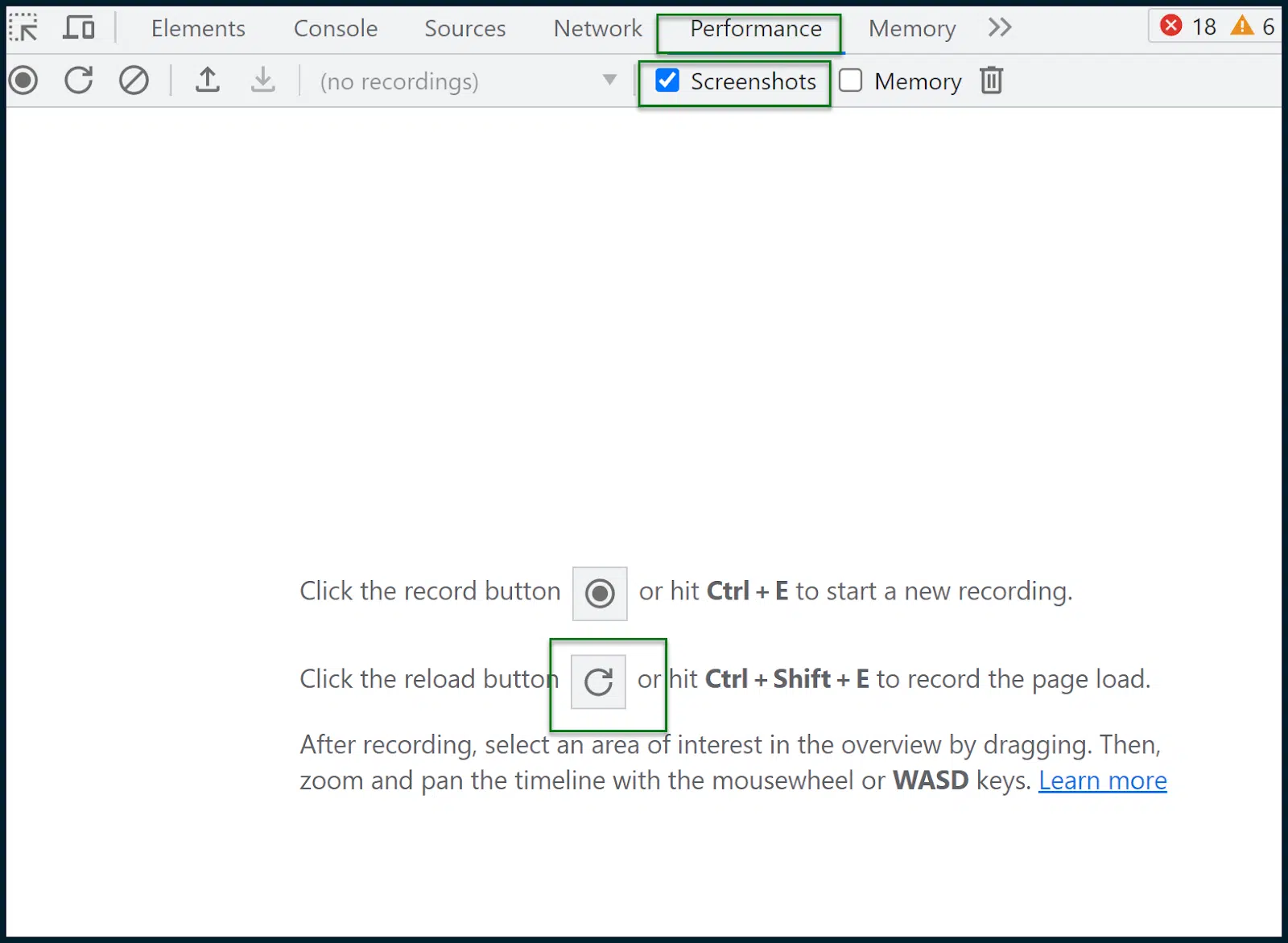

Open a new instance of Chrome. You should open a guest profile to ensure there is no clutter or enabled extensions to bloat your findings.

Remember: perform these actions from a clean guest Chrome profile.

Load up the site you want to analyze. In our case, that’s TechCrunch.

Accept cookies as needed. Once the page is loaded, open Chrome DevTools (right-click the page and select Inspect).

Open the Performance panel and tick the Screenshots checkbox.

Hit the reload button to record the page load. A report will then be generated.

This is where we all need to take a deep breath and try not to panic.

Above, boxed in green, you can see a thin pane that illustrates requests over time.

Within this box, you can drag your mouse to select a time slice, and the rest of the page and analysis will automatically adapt.

The region I’ve selected manually is the area covered by a semi-transparent blue box.

That’s where the main page load happens and what I’m interested in analyzing.

In this case, I’ve roughly selected the range of time and events between 32ms and 2.97 seconds. Let’s focus our gaze on the inside of the main thread.

You know how earlier I was saying that most rendering tasks and JavaScript executions are forced through the bottleneck of the main thread?

Well, we’re now looking at the inside of that main thread over time. And yes, in yellow, you can see lots of scripting tasks.

On the top couple of rows, as time progresses, there are more and more dark yellow chunks, confirming all the executing scripts and how long they take to process. You can click on individual bar chunks to get a readout for each item.
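Chrome treats any main-thread task longer than 50 ms as a long task. Lighthouse’s Total Blocking Time (TBT) lab metric sums the portion of each long task beyond that 50 ms. Here is a simplified sketch of that arithmetic over tasks you’ve noted from the trace. Lighthouse itself only counts tasks between FCP and Time to Interactive and clips tasks at the window edges, whereas this version just filters by start time. `MainThreadTask` and `totalBlockingTime` are hypothetical names.

```typescript
interface MainThreadTask {
  startTime: number; // ms
  duration: number;  // ms
}

const LONG_TASK_MS = 50;

// Sums the blocking portion (anything beyond 50 ms) of each long task that
// starts inside [from, to). Simplified: Lighthouse clips tasks at the window edges.
function totalBlockingTime(tasks: MainThreadTask[], from = 0, to = Infinity): number {
  return tasks
    .filter((task) => task.duration > LONG_TASK_MS && task.startTime >= from && task.startTime < to)
    .reduce((sum, task) => sum + (task.duration - LONG_TASK_MS), 0);
}

// In a browser, long tasks can also be observed live:
//   new PerformanceObserver((list) => {
//     for (const entry of list.getEntries()) console.log("long task", entry.duration);
//   }).observe({ type: "longtask" });
```

Those long yellow script chunks are exactly what this adds up. A 250 ms task contributes 200 ms of blocking time, while a 30 ms task contributes nothing.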

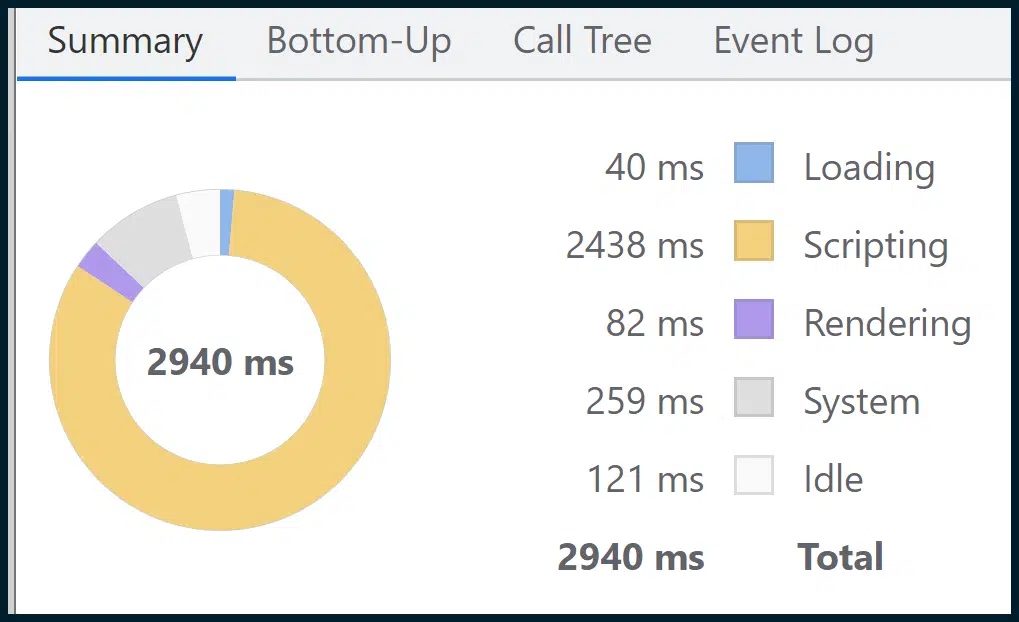

Although this is a powerful visual, you’ll find a more powerful one in the Summary section.

This sums up all the granular data, broken down into simple thematic sections (e.g., Scripting, Loading, Rendering) via the easy-to-digest visual medium of a doughnut chart.

As you can see, scripting (script execution) takes up most of the page load. So, our earlier supposition from Google’s mix of field and lab data, which pointed the finger at JavaScript execution bottlenecks in the main thread, appears to have been accurate.
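The doughnut chart simply shows each category’s share of the selected time range. To put numbers on the earlier PSI diagnostics, here is a quick sketch with hypothetical names that aggregates durations by category and reports percentages.

```typescript
interface TraceSlice {
  category: string;   // e.g. "Scripting", "Rendering", "Painting"
  durationMs: number;
}

// Returns [category, percentage of total time] pairs, largest first,
// with percentages rounded to one decimal place.
function summarizeByCategory(slices: TraceSlice[]): Array<[string, number]> {
  const totals = new Map<string, number>();
  let overall = 0;
  for (const slice of slices) {
    totals.set(slice.category, (totals.get(slice.category) ?? 0) + slice.durationMs);
    overall += slice.durationMs;
  }
  return Array.from(totals.entries())
    .map(([category, ms]): [string, number] => [
      category,
      overall === 0 ? 0 : Math.round((ms / overall) * 1000) / 10,
    ])
    .sort((a, b) => b[1] - a[1]);
}
```

Feeding it the 12 seconds of JavaScript execution plus the remaining 5 seconds under a placeholder “Other” label confirms the share. `summarizeByCategory([{ category: "Scripting", durationMs: 12000 }, { category: "Other", durationMs: 5000 }])` returns `[["Scripting", 70.6], ["Other", 29.4]]`.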

In 2023, this is one of the most commonly encountered issues, with few simple, off-the-shelf solutions.

It’s complex to create critical JavaScript rendering paths. It takes expertise to perform JavaScript code audits, and it isn’t so simple to adopt JavaScript parallelization or SSR.

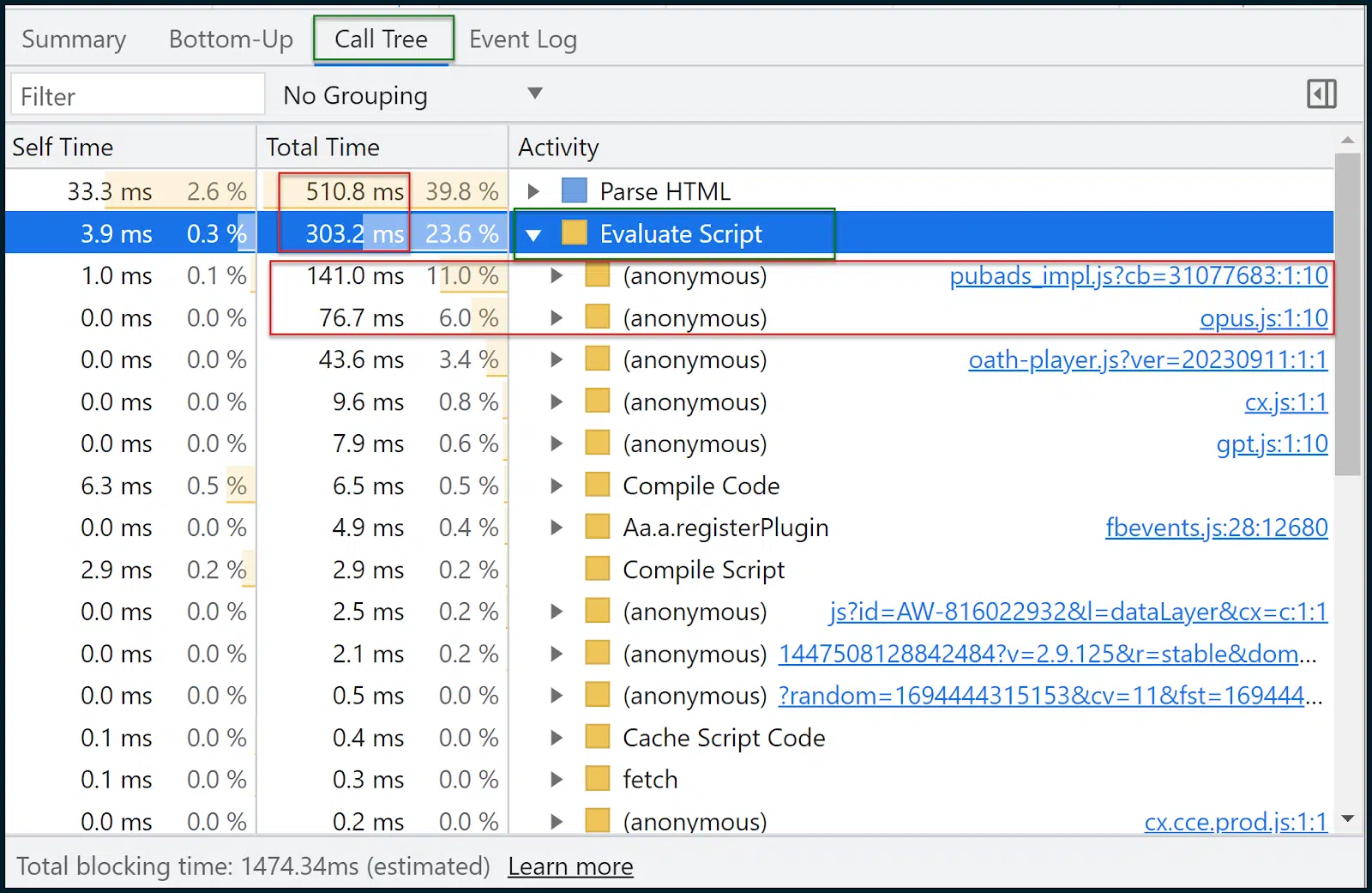

Now let’s go and look at the Call Tree.

Call Tree is often more useful than Bottom-Up.

The data is similar, but Call Tree will thematically group tasks into useful buckets like Evaluate Script (script execution).

You can then click on a group, expand it, and see the scripts and how much time each one took. 11% of the time went to pubads_impl.jsm, while 6% went to opus.js.

I don’t know what these modules are (and you may not either), but this is where the optimization journey often begins.

We can now take a step back to:

- Google these scripts and see whether they are part of third-party libraries, what they do, and what the impact is.

- Consult the developer on how these could be deployed more intelligently.

- Narrow the problem down to individual resources and look for solutions.

- Address the performance deficit (or, alternatively, fight for more resources/bandwidth and a powerful hosting environment, if that’s indeed required).
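For the first step, a quick way to see whether the heavy hitters are third-party is to group script time by host and compare each host against your own domain. This sketch assumes you’ve copied per-script URLs and times out of the Call Tree by hand. `groupByHost` is hypothetical, and the domain check is deliberately naive: a CDN hostname you own on a different domain will show up as third-party.

```typescript
interface ScriptCost {
  url: string;
  selfTimeMs: number;
}

interface HostCost {
  host: string;
  ms: number;
  thirdParty: boolean;
}

// Totals script time per hostname, largest first, flagging hosts outside your domain.
function groupByHost(scripts: ScriptCost[], firstPartyDomain: string): HostCost[] {
  const byHost = new Map<string, number>();
  for (const script of scripts) {
    const host = new URL(script.url).hostname;
    byHost.set(host, (byHost.get(host) ?? 0) + script.selfTimeMs);
  }
  return Array.from(byHost.entries())
    .map(([host, ms]) => ({
      host,
      ms,
      // Naive check: your domain or any subdomain of it counts as first-party.
      thirdParty: !(host === firstPartyDomain || host.endsWith(`.${firstPartyDomain}`)),
    }))
    .sort((a, b) => b.ms - a.ms);
}
```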

If you’ve managed to stick with me this far, congratulations. In terms of deep Core Web Vitals and page speed analysis, we only used:

- PageSpeed Insights.

- Chrome DevTools (the Performance panel).

Yes, you really can be that lean. However, there are other tools that can be of immense help:

- GTmetrix: Especially useful for its waterfall chart (a free account is required for the waterfall), which is well worth learning to read. Remember that GTmetrix runs unthrottled by default, giving overly favorable results. Be sure to set it to an LTE connection.

- Google Search Console: If you set this up and verify your site, you can see lots of performance and usability data over time, including Core Web Vitals metrics across multiple pages (aggregated).

- Screaming Frog SEO Spider: This can be connected to the PageSpeed Insights API to allow bulk fetching of Core Web Vitals Pass or Fail grades (for multiple pages). If you’re using the free PageSpeed Insights API, don’t hammer it unreasonably.
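If you script bulk checks against the PageSpeed Insights API yourself rather than through Screaming Frog, the simplest way to avoid hammering it is to send requests one at a time with a pause in between. Here is a minimal sketch. `auditSequentially` is hypothetical, and the one-second default delay is an arbitrary placeholder rather than a documented quota. `fetchReport` is injected so you can plug in a real call such as `(page) => fetch(psiRequestUrlFor(page)).then((r) => r.json())`, where `psiRequestUrlFor` is a hypothetical stand-in for whatever function builds your request URL.

```typescript
function pause(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(() => resolve(), ms));
}

// Fetches one report per page, strictly one at a time, pausing between requests.
// Failures are recorded instead of aborting the whole run.
async function auditSequentially(
  pageUrls: string[],
  fetchReport: (pageUrl: string) => Promise<unknown>,
  delayMs = 1000, // arbitrary placeholder, not a documented quota
): Promise<Map<string, unknown>> {
  const reports = new Map<string, unknown>();
  for (let i = 0; i < pageUrls.length; i++) {
    const page = pageUrls[i];
    try {
      reports.set(page, await fetchReport(page));
    } catch (error) {
      reports.set(page, { error: String(error) });
    }
    if (i < pageUrls.length - 1) await pause(delayMs);
  }
  return reports;
}
```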

Improving your page speed ratings used to be as simple as compressing and uploading some images. Nowadays? It’s a complex Core Web Vitals crusade. Prepare to engage fully. Anything less will meet with failure.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land. Staff authors are listed here.